Problem statement

Many organizations try to increase their release velocity, especially in a competitive environment. Moreover, in a big team where many developers work on various features in parallel, shortening the release process, even by a few minutes, saves a considerable amount of time.

One more important thing is the cost of the environment. Cloud allows us to provision virtually unlimited resources and have as many environments as we need, but we should be careful and terminate everything that is not required anymore to keep costs to a minimum.

Solution overview

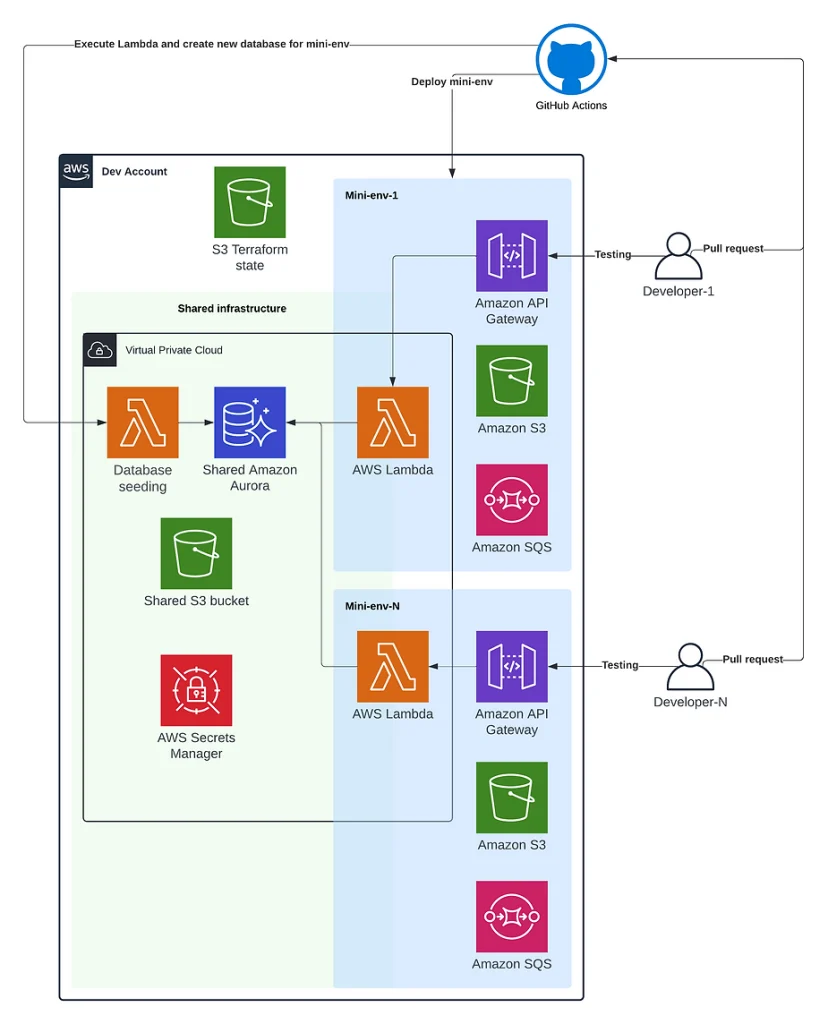

The application is a “classic” serverless application, containing many Lambda functions, an API Gateway with various resources and methods, SQS queues, and S3 buckets.

The first requirement was to keep using the same tools as before:

– Terraform

– Terragrunt

– GitHub Actions

The second requirement is to deploy as fast as possible. Here we need to consider how long each AWS resource takes to provision, as well as cross-resource dependencies, because some resources cannot be deployed in parallel. Amazon Aurora requires an existing VPC, and the Lambda functions, in this case, depend on both the VPC and Aurora. The VPC (3-4 minutes) and Aurora (10-15 minutes) are the most time-consuming resources to provision via Terraform.

The third requirement is to provision a new “ephemeral environment” for every pull request into the “master” branch and terminate it once the pull request is closed.

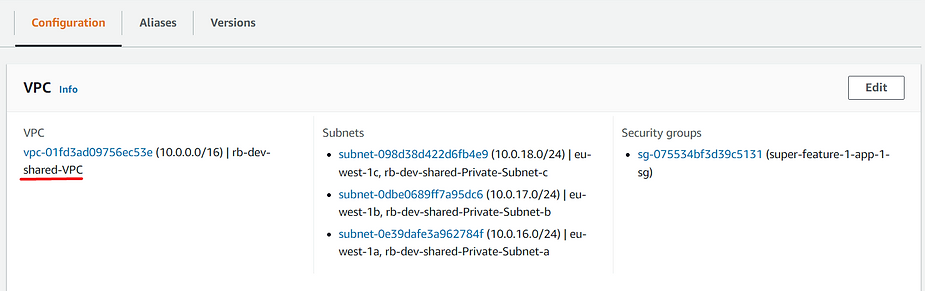

In the initial design, we decided to split all resources logically into “shared” and “personal”. The shared environment contains the most expensive and time-consuming resources, such as the VPC and Aurora, plus a common S3 bucket and Secrets Manager (storing Aurora secrets and more). The personal environment consists of the Lambda functions, because their code changes in every pull request; API Gateway, because every developer needs a personal endpoint for testing; and the SQS queues and S3 buckets, which cost little and can be created and removed quickly. The Lambda functions are deployed into the shared VPC and connect to the shared Aurora cluster with credentials from Secrets Manager.

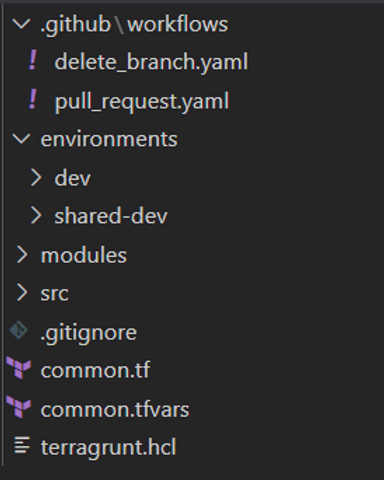

The directory structure of the GitHub repository is as follows:

– .github/workflows/ contains the pipeline definitions for GitHub Actions.

– environments/shared-dev/ contains the Terraform files that call the modules for VPC and Aurora.

– environments/dev/ contains the Terraform files that call the modules for the serverless application.

– modules/ contains all Terraform modules.

– src/ contains the packages for the serverless application, which appear there after the CI steps.

– The terragrunt.hcl file is the core of the logic, because it generates the Terraform state dynamically and independently for every ephemeral environment. This file is included from shared-dev and dev.
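As a minimal sketch of how a directory such as environments/dev could include the root file, a child terragrunt.hcl might look like the following. The file contents and the `environment` input name are assumptions for illustration; the original child files are not shown in this post.

```hcl
# environments/dev/terragrunt.hcl (hypothetical contents)

# Search the parent folders for the root terragrunt.hcl and inherit its
# configuration, including the remote_state block.
include {
  path = find_in_parent_folders()
}

# Hypothetical input: Terragrunt passes it to Terraform as TF_VAR_environment,
# so the Terraform code would need a matching `variable "environment"`.
inputs = {
  environment = get_env("ENV_NAME")
}
```

Because the root file builds the state key from `path_relative_to_include()`, each including directory gets its own key prefix (for example, environments/dev) without repeating the backend configuration.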

Here are example pipelines for deploying and destroying the environments:

    name: Pull Request

    on:
      pull_request:
        branches: [ master ]

    # id-token: write allows the job to request an OIDC token for assuming the AWS role
    permissions:
      id-token: write
      contents: read

    jobs:
      pull_request:
        runs-on: ubuntu-latest
        env:
          AWS_DEFAULT_REGION: us-east-1
        steps:
          - name: Install Terraform
            uses: hashicorp/setup-terraform@v1
            with:
              terraform_version: 1.1.2

          - name: Install Terragrunt
            uses: supplypike/setup-bin@v1
            with:
              uri: 'https://github.com/gruntwork-io/terragrunt/releases/download/v0.35.16/terragrunt_linux_amd64'
              name: 'terragrunt'
              version: '0.35.16'

          - name: Configure aws credentials
            uses: aws-actions/configure-aws-credentials@v1
            with:
              role-to-assume: arn:aws:iam::1**********0:role/deploy-role
              role-session-name: deploysession
              aws-region: ${{env.AWS_DEFAULT_REGION}}

          - name: Checkout
            uses: actions/checkout@v2

          - name: Build mini-environment
            env:
              # The source branch name identifies the ephemeral environment
              ENV_NAME: ${{ github.head_ref }}
              USER_NAME: ${{ github.actor }}
              PR_NUMBER: ${{ github.event.number }}
              # COMMIT_MESSAGE: ${{ github.event.pull_request.title }}
            run: |
              cd environments/dev
              terragrunt apply -auto-approve

The Terraform and Terragrunt installation steps are cached by GitHub Actions, so they do not add time to every execution.

Configuring GitHub Actions with IAM roles is described in the previous post.

ENV_NAME is an important environment variable that is used to create and identify the appropriate Terraform state for every ephemeral environment.

USER_NAME and PR_NUMBER (COMMIT_MESSAGE is also possible) will be added to AWS resource tags for better visibility.
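One way these variables could reach the resource tags is sketched below. It assumes the root terragrunt.hcl forwards them as a Terraform input and that the AWS provider is version 3.38.0 or later, which supports `default_tags`. The variable name `common_tags` and the tag keys are hypothetical; the original tagging code is not shown in this post.

```hcl
# Root terragrunt.hcl (sketch)
locals {
  # With a second argument, get_env falls back to the default when the
  # environment variable is not set.
  user_name = get_env("USER_NAME", "unknown")
  pr_number = get_env("PR_NUMBER", "none")
}

inputs = {
  # "common_tags" is a hypothetical variable name.
  common_tags = {
    Environment = get_env("ENV_NAME")
    Owner       = local.user_name
    PullRequest = local.pr_number
  }
}
```

```hcl
# Terraform side (sketch), e.g. in environments/dev
variable "common_tags" {
  type    = map(string)
  default = {}
}

provider "aws" {
  # Applies the tags to every taggable resource created by this provider.
  default_tags {
    tags = var.common_tags
  }
}
```

With this layout, individual modules do not need to pass tags around, and the tag values change only when the pipeline variables do.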

    name: Destroy mini-env when a branch was deleted

    on:
      pull_request:
        types: [closed]

    permissions:
      id-token: write
      contents: read

    jobs:
      destroy_mini_env:
        runs-on: ubuntu-latest
        steps:
          - name: Install Terraform
            uses: hashicorp/setup-terraform@v1
            with:
              terraform_version: 1.1.2

          - name: Install Terragrunt
            uses: supplypike/setup-bin@v1
            with:
              uri: 'https://github.com/gruntwork-io/terragrunt/releases/download/v0.35.16/terragrunt_linux_amd64'
              name: 'terragrunt'
              version: '0.35.16'

          - name: Checkout
            uses: actions/checkout@v2

          - name: Configure aws credentials
            uses: aws-actions/configure-aws-credentials@v1
            with:
              role-to-assume: arn:aws:iam::1**********0:role/deploy-role
              role-session-name: destroysession
              aws-region: us-east-1

          - name: Destroy mini-environment
            env:
              # Must match the ENV_NAME used at deploy time to find the same state
              ENV_NAME: ${{ github.head_ref }}
              USER_NAME: ${{ github.actor }}
              # Pull request number (github.run_number is the workflow run counter)
              PR_NUMBER: ${{ github.event.number }}
            run: |
              cd environments/dev
              terragrunt destroy -auto-approve

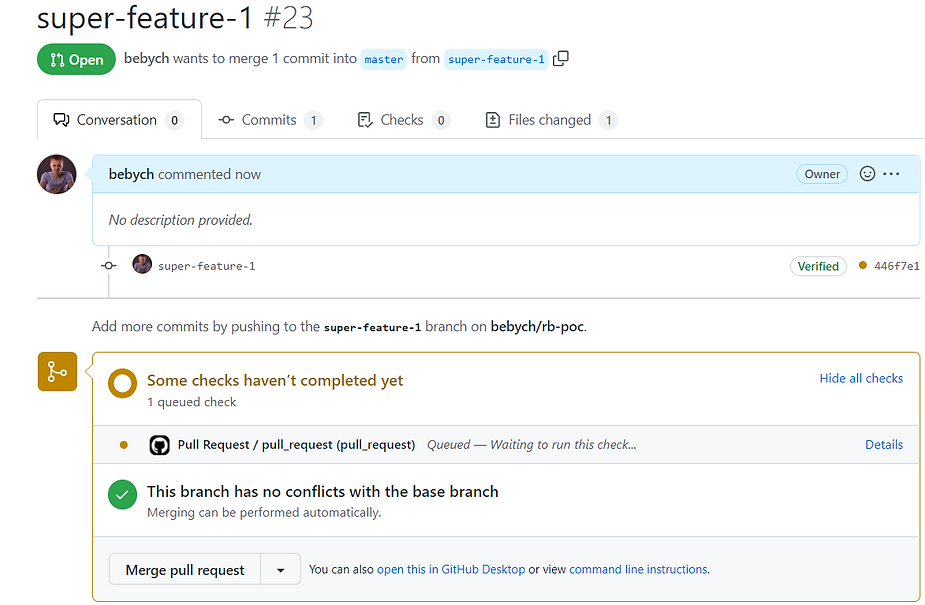

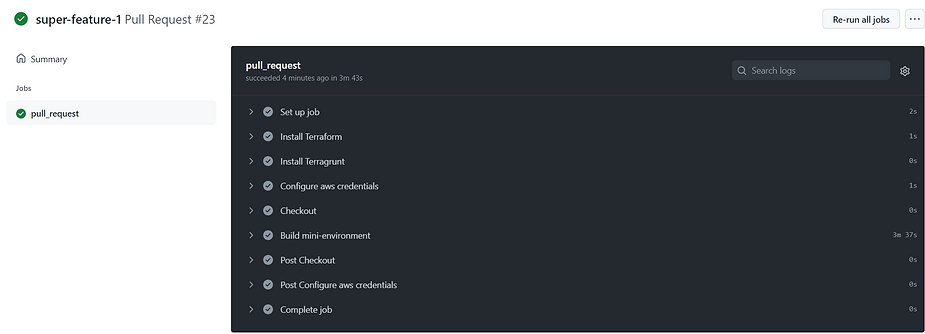

Once the pull request into the “master” branch is created, the pipeline starts.

Once it is completed, we can check the results.

The terragrunt.hcl file contains the following section:

    ### Remote state S3/DynamoDB configuration
    remote_state {
      backend = "s3"
      config = {
        region         = local.tfstate_region
        bucket         = local.tfstate_bucket
        key            = "${path_relative_to_include()}/${get_env("ENV_NAME")}/terraform.tfstate"
        encrypt        = true
        dynamodb_table = local.tfstate_table
      }
    }

It reads the “ENV_NAME” environment variable and stores the new Terraform state under a folder (S3 key prefix) with that name.
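Since ENV_NAME comes from the branch name, it can contain slashes or uppercase letters. These are valid in an S3 key but may not be valid if the same value is reused in resource names. The following is a possible hardening, not part of the original setup: a sketch that normalizes the value before using it. The local names `raw_env_name` and `env_name` are hypothetical, and the `tfstate_*` locals are assumed to be defined elsewhere in the same file, as in the original section.

```hcl
locals {
  # Branch name supplied by the pipeline, e.g. "feature/Login_v2".
  raw_env_name = get_env("ENV_NAME")

  # Lowercase the name and replace every character outside a-z, 0-9 and "-"
  # with "-", so the example above becomes "feature-login-v2".
  env_name = replace(lower(local.raw_env_name), "/[^a-z0-9-]/", "-")
}

remote_state {
  backend = "s3"
  config = {
    region         = local.tfstate_region
    bucket         = local.tfstate_bucket
    key            = "${path_relative_to_include()}/${local.env_name}/terraform.tfstate"
    encrypt        = true
    dynamodb_table = local.tfstate_table
  }
}
```

One trade-off to keep in mind: two different branch names can normalize to the same value (for example, "a/b" and "a-b"), in which case they would share a state file.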

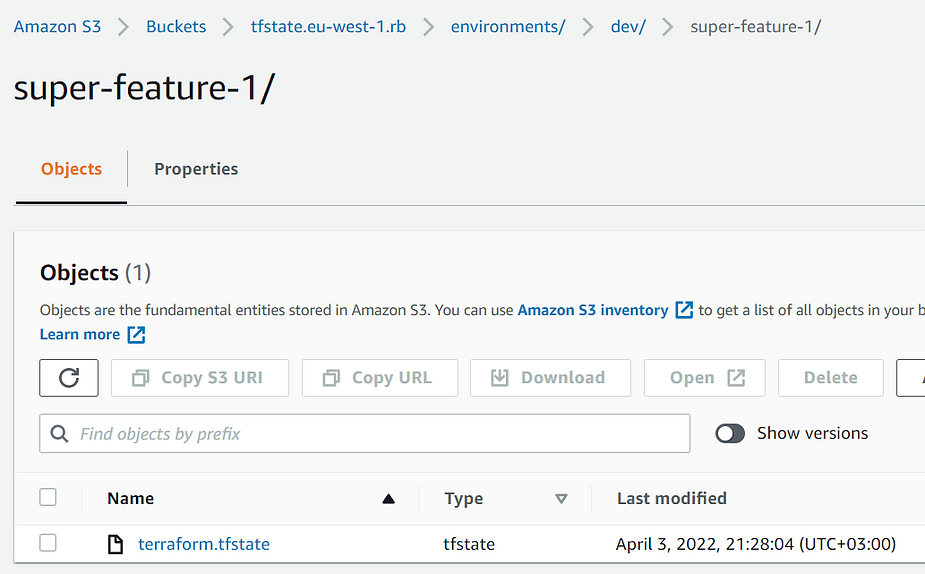

The new state file has been created:

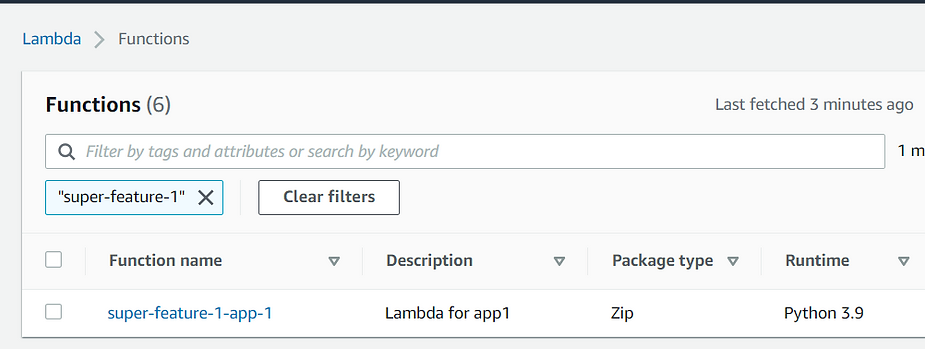

The application has been deployed:

Every ephemeral environment gets the values it requires from the Terraform state file of the “shared” environment:

    ### Data sources
    # Shared env State
    data "terraform_remote_state" "shared" {
      backend = "s3"
      config = {
        region = var.tfstate_region
        bucket = var.tfstate_bucket
        key    = "environments/shared-dev/shared/terraform.tfstate"
      }
    }
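To illustrate how values from this data source might be consumed, here is a minimal sketch of a Lambda function attached to the shared VPC. The output names (`private_subnet_ids`, `lambda_security_group_id`, `aurora_secret_arn`), the variables, the package path, and the runtime are all assumptions; the shared environment would need to declare matching `output` blocks, and the original project wraps its resources in modules that are not shown here.

```hcl
variable "env_name" {
  type = string # hypothetical: name of the ephemeral environment
}

variable "lambda_role_arn" {
  type = string # hypothetical: IAM role assumed by the function
}

locals {
  # Shortcut to the outputs exported by the shared environment.
  shared = data.terraform_remote_state.shared.outputs
}

resource "aws_lambda_function" "api" {
  function_name = "${var.env_name}-api"
  role          = var.lambda_role_arn
  handler       = "app.handler"                        # placeholder
  runtime       = "python3.9"                          # placeholder
  filename      = "${path.module}/../../src/api.zip"   # hypothetical package

  # Attach the function to the subnets and security group of the shared VPC.
  vpc_config {
    subnet_ids         = local.shared.private_subnet_ids
    security_group_ids = [local.shared.lambda_security_group_id]
  }

  # Pass a reference to the Aurora credentials, not the credentials themselves.
  environment {
    variables = {
      DB_SECRET_ARN = local.shared.aurora_secret_arn
    }
  }
}
```

Reading the shared state this way keeps the ephemeral environment read-only with respect to the VPC and Aurora: it can look up their identifiers but never manages them.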

The new Lambda function has been deployed into the shared VPC:

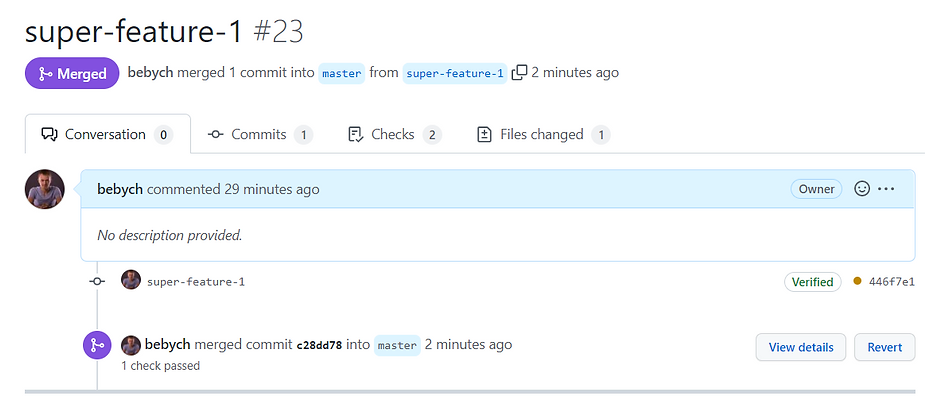

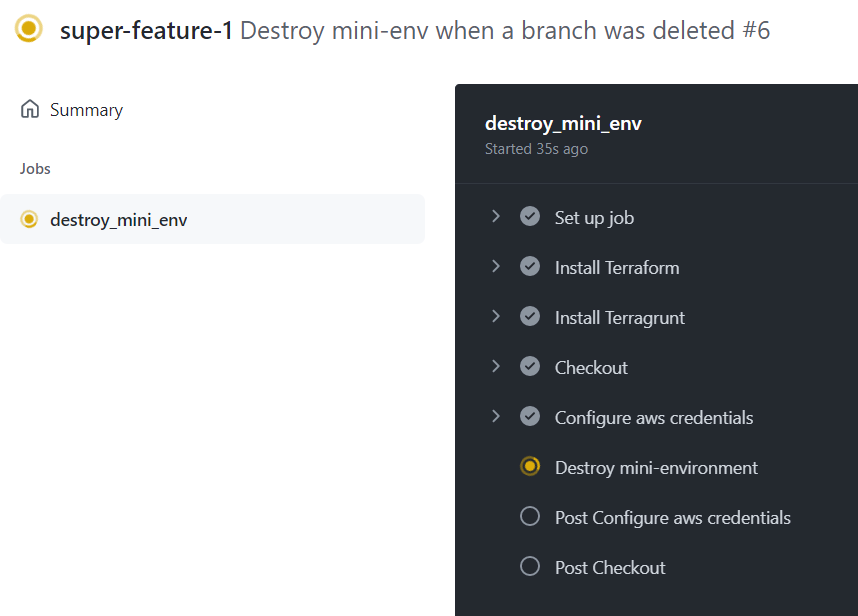

Once the pull request is merged (or discarded), the pipeline for environment removal starts.

Conclusion

The AWS Cloud and an Infrastructure as Code tool like Terraform allow us to provision and destroy ephemeral environments quickly and conveniently. This approach helps us increase release velocity, allows many developers to work on different features in parallel, and minimizes costs by keeping only the resources we need and removing the rest. Terragrunt lets us dynamically generate a dedicated Terraform state file for every environment and keep the environments independent. A CI/CD platform like GitHub Actions organizes all the steps into a unified, automated workflow in line with DevOps best practices.